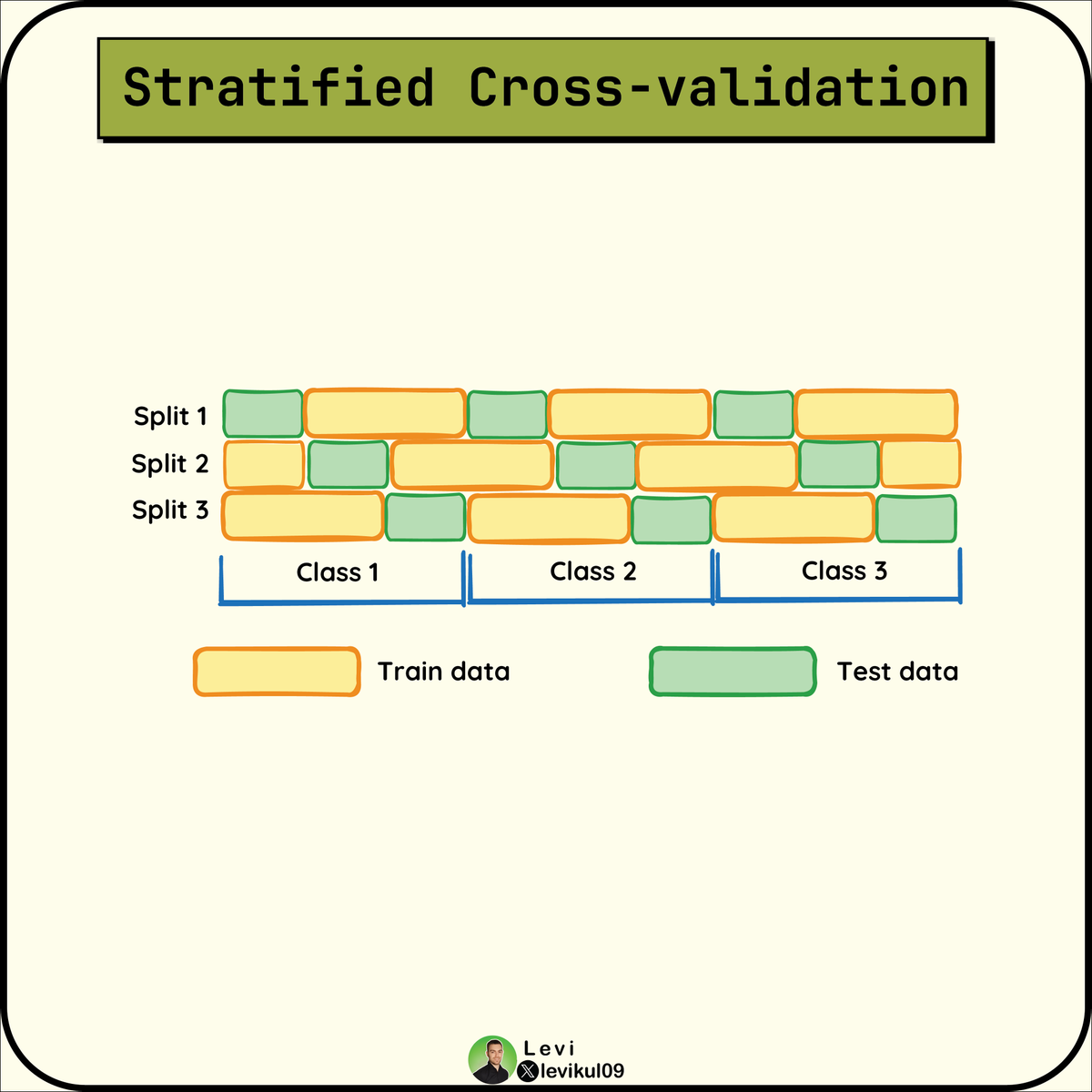

For classification, stratified cross-validation is more reliable than plain k-fold, because every fold keeps roughly the same class proportions as the full dataset.

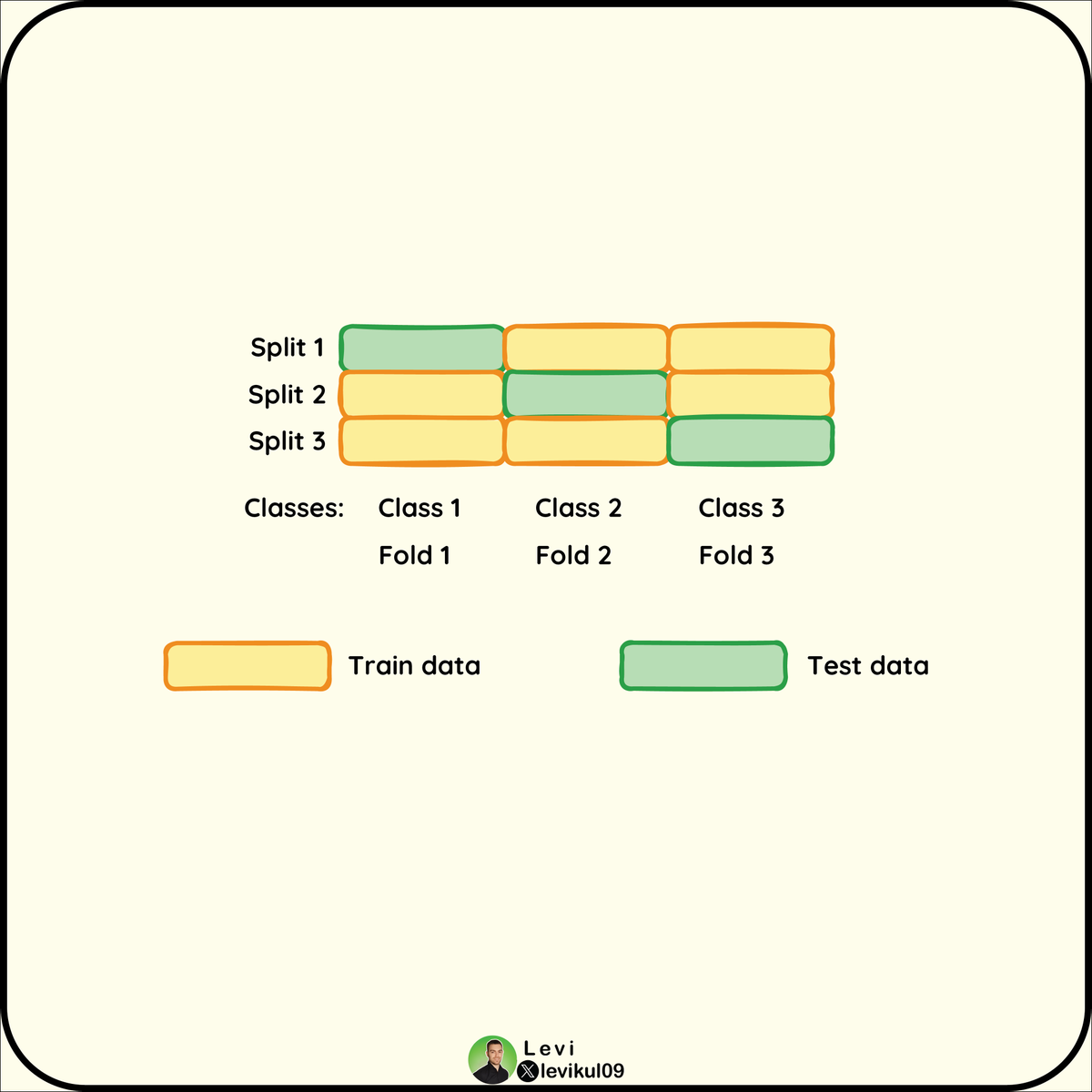

With plain k-fold (no shuffling), it's not rare for one fold to contain data from only one class, for example when the dataset is sorted by label.
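Here is a minimal sketch of that failure case, assuming scikit-learn's `KFold` and `StratifiedKFold`. The toy labels (three classes, 30 samples each, sorted by class) and the helper `fold_class_counts` are made up for illustration.

```python
import numpy as np
from sklearn.model_selection import KFold, StratifiedKFold

# Toy labels sorted by class: 30 samples each of classes 0, 1, 2 (illustrative assumption).
y = np.repeat([0, 1, 2], 30)
X = np.zeros((len(y), 1))  # feature values do not affect how the folds are drawn


def fold_class_counts(splitter):
    """Return the per-class sample counts of each test fold (hypothetical helper)."""
    return [
        np.bincount(y[test_idx], minlength=3).tolist()
        for _, test_idx in splitter.split(X, y)
    ]


# Plain k-fold without shuffling cuts the data into consecutive blocks.
kfold_counts = fold_class_counts(KFold(n_splits=3))
# Stratified k-fold keeps the class proportions in every fold.
strat_counts = fold_class_counts(StratifiedKFold(n_splits=3))

print("k-fold:    ", kfold_counts)
print("stratified:", strat_counts)
```

On this sorted toy data, each plain k-fold test fold holds a single class, while each stratified fold holds 10 samples of every class. Shuffling (`KFold(shuffle=True)`) reduces the problem but does not guarantee balanced folds.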

For regression, however, plain k-fold is the better strategy: the target is continuous, so there are no class proportions to preserve and representative folds are harder to build.
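For the regression case, a rough sketch could look like this, assuming scikit-learn's `KFold` and `cross_val_score`. The synthetic data, the coefficients, and the choice of `LinearRegression` are placeholders, not something from the book.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score

# Synthetic regression data (illustrative assumption): linear signal plus small noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + rng.normal(scale=0.1, size=100)

# Plain k-fold with shuffling, so the folds do not depend on the row order.
cv = KFold(n_splits=5, shuffle=True, random_state=0)

# For regressors, cross_val_score reports R^2 per fold by default.
scores = cross_val_score(LinearRegression(), X, y, cv=cv)

print("R^2 per fold:", scores)
print("mean R^2:", scores.mean())
```

Shuffling is a design choice here: if the rows are ordered (by time, by target value, and so on), unshuffled folds can be unrepresentative even without any classes involved.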

That's it for today.

I read about this technique in the book:

Introduction to Machine Learning with Python by Andreas C. Müller and Sarah Guido

Like/Retweet the first tweet below for support and follow @levikul09 for more Data Science threads.

Thanks 😉
