Consider this:

Suppose you are analyzing the stock market.

One stock might range from $10 to $50 over a year, while another could range from $100 to $500.

Without normalization, the two price scales differ by a factor of ten, so the raw prices can't be compared directly.

If you normalize the prices, the relative performance of the two stocks can be compared easily.
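Here is a minimal sketch of that idea in Python, using Min-Max scaling. The prices and the helper name `min_max_normalize` are made up for illustration; they only mirror the $10 to $50 and $100 to $500 ranges from the example.

```python
def min_max_normalize(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical, so there is no spread to rescale.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical prices, chosen to match the ranges in the example.
stock_a = [10, 20, 30, 50]
stock_b = [100, 200, 300, 500]

norm_a = min_max_normalize(stock_a)
norm_b = min_max_normalize(stock_b)

print(norm_a)
print(norm_b)
```

With these made-up numbers both stocks end up on the same 0 to 1 scale, so you can see that they moved in the same way even though one costs ten times more.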

Consider this:

A hotel is analyzing guest feedback.

- The scores for cleanliness are low (people are rating it harshly).

- Friendliness is rated well.

If we compare these two results, cleanliness looks like something the hotel definitely needs to improve.

Here is the trick:

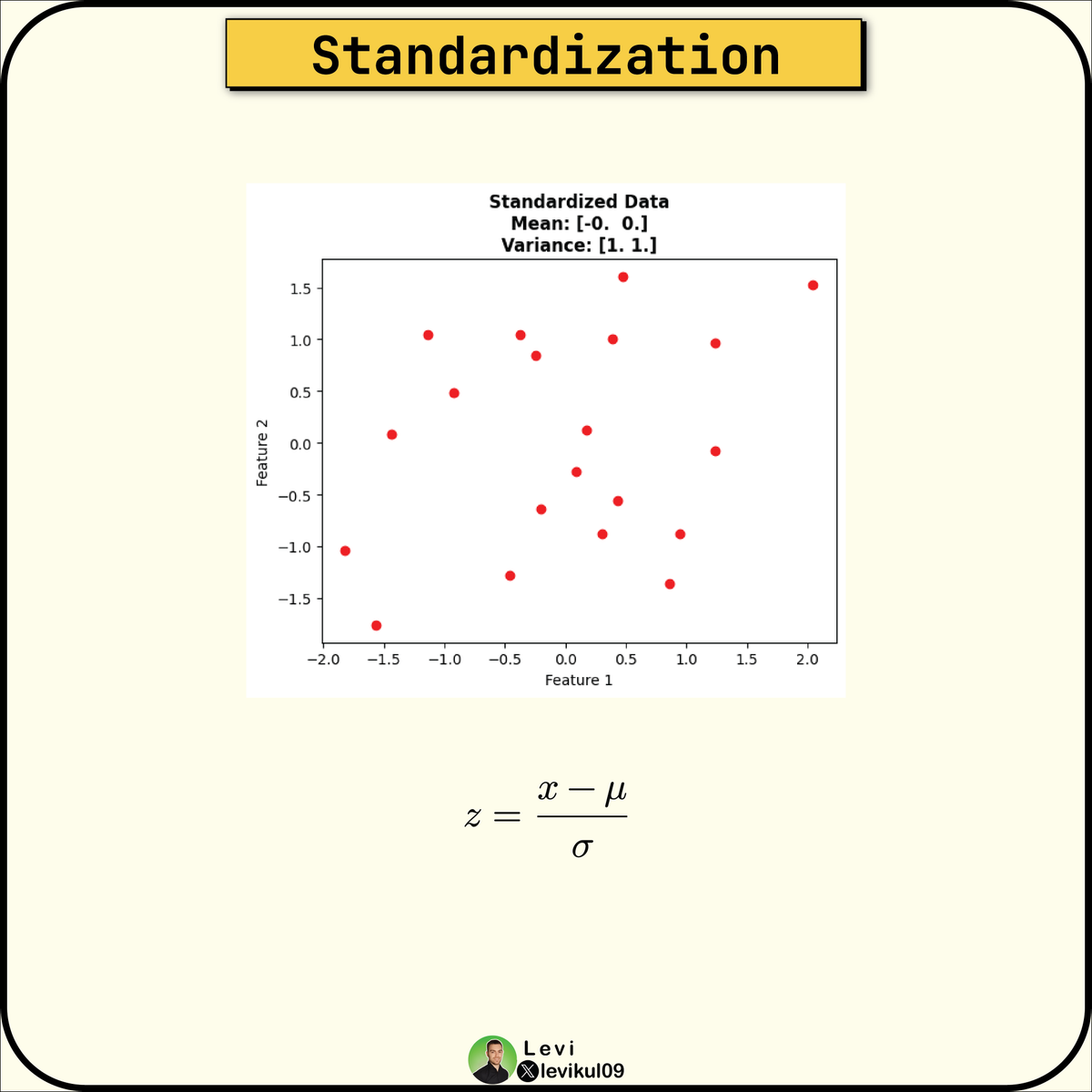

What if people are generally more critical of cleanliness and always give it lower scores than friendliness?

Then we cannot compare the raw feedback scores directly.

By standardizing the data, we can compare the results and decide which areas are truly underperforming.
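A rough sketch of how this could look in Python, using a z-score (subtract the mean, divide by the standard deviation). Everything here is an assumption for illustration: the scores are invented, and the "reference" lists stand in for how guests typically rate each attribute, for example across comparable hotels.

```python
from statistics import mean, pstdev


def z_score(value, reference):
    """Return how many standard deviations `value` lies from the reference mean."""
    mu = mean(reference)
    sigma = pstdev(reference)  # population standard deviation
    if sigma == 0:
        raise ValueError("reference scores have no spread")
    return (value - mu) / sigma


# Hypothetical reference scores: guests tend to rate cleanliness
# lower than friendliness everywhere, not just at this hotel.
cleanliness_reference = [5, 6, 7, 6, 6]
friendliness_reference = [8, 9, 9, 8, 9]

# Hypothetical average scores for the hotel being analyzed.
hotel_cleanliness = 6.5
hotel_friendliness = 8.0

z_clean = z_score(hotel_cleanliness, cleanliness_reference)
z_friendly = z_score(hotel_friendliness, friendliness_reference)

print(f"cleanliness z-score:  {z_clean:.2f}")
print(f"friendliness z-score: {z_friendly:.2f}")
```

In this invented case the raw cleanliness score (6.5) is lower than the raw friendliness score (8.0), yet cleanliness sits above its typical level and friendliness sits below it. The standardized values point to friendliness as the area that is actually underperforming.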

Did you like this post?

Hit that follow button for me and pay back with your support.

It literally takes 1 second for you but makes me 10x happier.

Thanks 😉

If you haven't already, join our newsletter DSBoost.

We share:

• Podcast notes

• Learning resources

• Interesting collections of content

dsboost.dev

![Normalization rescales the values into a range of [0,1]. It is also known as Min-Max scaling. It i...](https://pbs.twimg.com/media/GHqHAehawAAfCGg.png)