Previously we've seen @LangChainAI's ParentDocumentRetriever, which creates smaller chunks from a document and links them back to the original document during retrieval.

MultiVectorRetriever is a more customizable version of that. Let's see how to use it 🧵👇

@LangChainAI ParentDocumentRetriever automatically creates the small chunks and links them to their parent document ID.

If we want to create additional vectors for each document, beyond the smaller chunks, we can do that and then retrieve the documents through those vectors using MultiVectorRetriever.

We can customize how these additional vectors are created for each parent document. Here are some ways @LangChainAI mentions in its documentation:

- smaller chunks

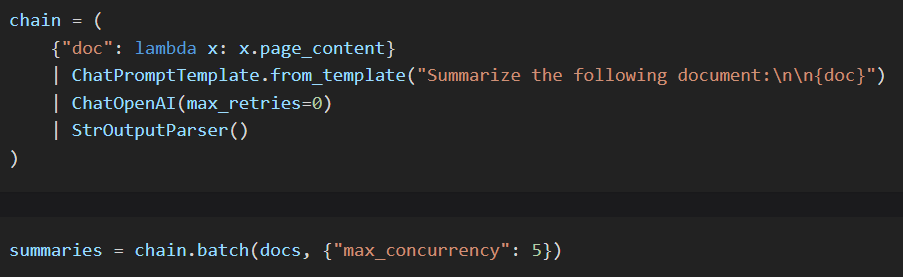

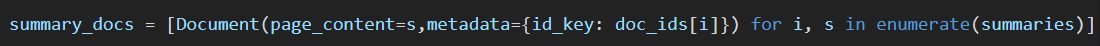

- store the summary vector of each document

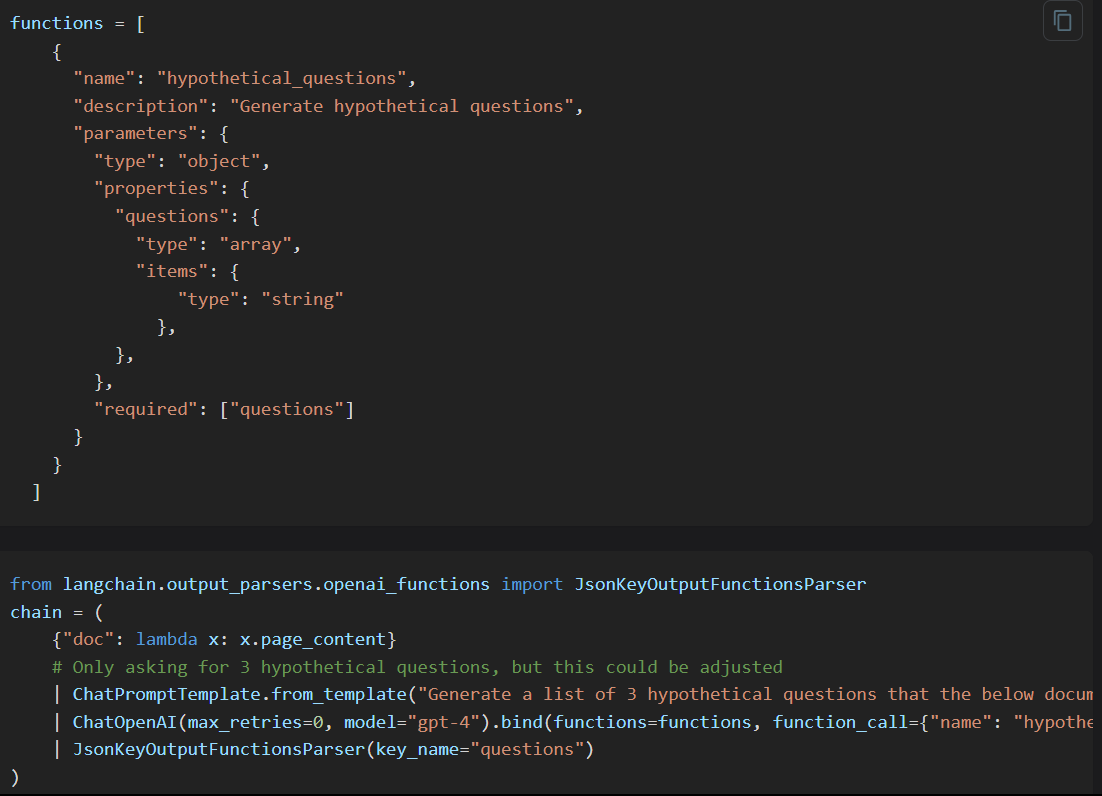

- store the vectors of hypothetical questions for each document

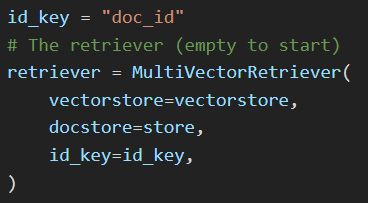

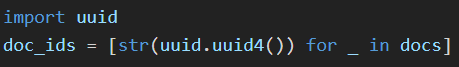

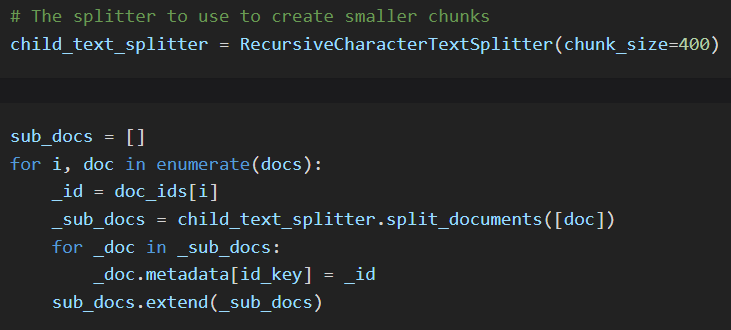

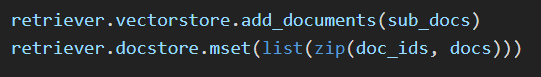

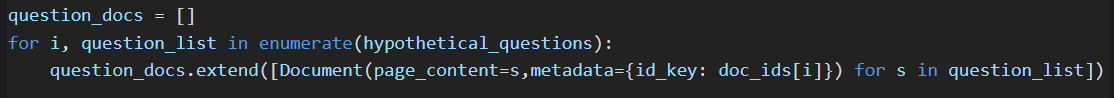
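The three options above differ only in which text gets embedded; every derived piece still carries its parent's id. Here's a rough plain-Python sketch of that idea. It is not LangChain code: `split_into_chunks`, `summarize`, `hypothetical_questions` and `build_child_entries` are hypothetical helpers, and the summary and question functions are simple placeholders for what would normally be LLM calls.

```python
import uuid

def split_into_chunks(text, chunk_size=40):
    """Fixed-size character chunks (a stand-in for a real text splitter)."""
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

def summarize(text):
    """Hypothetical placeholder for an LLM summary: keep the first sentence."""
    return text.split(".")[0].strip() + "."

def hypothetical_questions(text):
    """Hypothetical placeholder for LLM-generated questions about the text."""
    topic = text.split()[0]
    return [f"What is {topic}?"]

def build_child_entries(doc_id, text):
    """Return (text_to_embed, metadata) pairs that all point back to doc_id."""
    entries = []
    for chunk in split_into_chunks(text):
        entries.append((chunk, {"doc_id": doc_id, "kind": "chunk"}))
    entries.append((summarize(text), {"doc_id": doc_id, "kind": "summary"}))
    for question in hypothetical_questions(text):
        entries.append((question, {"doc_id": doc_id, "kind": "question"}))
    return entries

parent_text = (
    "LangChain provides retrievers. "
    "MultiVectorRetriever stores several vectors per document."
)
parent_id = str(uuid.uuid4())
child_entries = build_child_entries(parent_id, parent_text)

for text, metadata in child_entries:
    print(metadata["kind"], "->", repr(text))
```

Whatever we choose to embed, the only thing that has to stay constant is the `doc_id` in the metadata.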

Now let's try to understand the example code from the LangChain documentation 👇

Based on the specific use case, we can create other vectors as well for each document.

For these vectors, we need to make sure to add the doc_id to the metadata. MultiVectorRetriever then handles the rest and retrieves the original documents from these vectors.
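To make the doc_id lookup concrete, here's a toy sketch of the mechanism in plain Python. It only mimics the idea and is not how LangChain implements it: `embed` is a bag-of-words stand-in for a real embedding model, and `docstore`, `vector_index` and `retrieve_parents` are hypothetical names made up for this example.

```python
from collections import Counter
import math

def embed(text):
    """Toy bag-of-words 'embedding' (assumption: real setups use an embedding model)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Parent documents live in a key-value store, keyed by doc_id.
docstore = {
    "doc-1": "Full text of a long article about vector databases",
    "doc-2": "Full text of a long article about prompt templates",
}

# Every indexed vector is derived from a parent and carries its doc_id in metadata.
vector_index = [
    (embed("vector databases store embeddings"), {"doc_id": "doc-1"}),
    (embed("how do I search embeddings quickly"), {"doc_id": "doc-1"}),
    (embed("prompt templates format model inputs"), {"doc_id": "doc-2"}),
]

def retrieve_parents(query, k=2):
    """Find the k most similar vectors, then return their parents without duplicates."""
    query_vector = embed(query)
    ranked = sorted(vector_index, key=lambda item: cosine(query_vector, item[0]), reverse=True)
    parent_ids = []
    for _, metadata in ranked[:k]:
        if metadata["doc_id"] not in parent_ids:
            parent_ids.append(metadata["doc_id"])
    return [docstore[doc_id] for doc_id in parent_ids]

results = retrieve_parents("search embeddings in vector databases")
print(results)
```

Both top matches here point to `doc-1`, so the parent document comes back only once even though two of its vectors matched.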

Thanks for reading.

I write about AI, ChatGPT, LangChain etc. and try to make complex topics as easy as possible.

Stay tuned for more! 🔥 #ChatGPT #LangChain
