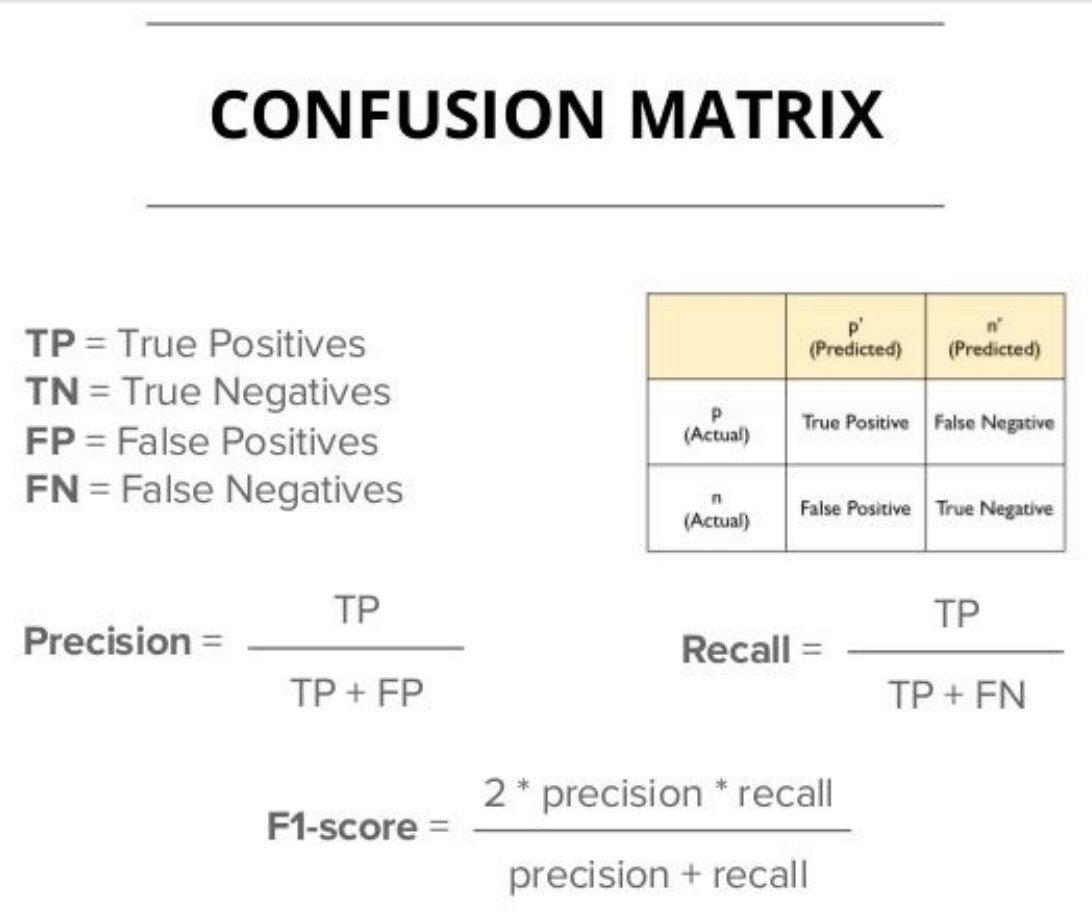

Recall measures the model's ability to find all of the positive examples. It is calculated as the number of true positive predictions divided by the total number of actual positive examples in the dataset, that is, TP / (TP + FN), where FN (false negatives) counts the positives the model missed.
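As a minimal sketch, recall can be computed directly from lists of binary labels. The function name `recall` and the example label lists are illustrative assumptions, with `1` marking the positive class.

```python
def recall(y_true: list[int], y_pred: list[int]) -> float:
    """Recall = TP / (TP + FN): the share of actual positives the model finds."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    # Assumed convention: return 0.0 when the data contains no positive examples.
    return tp / (tp + fn) if (tp + fn) > 0 else 0.0


y_true = [1, 0, 1, 1, 0, 1]  # four actual positives
y_pred = [1, 0, 0, 1, 1, 1]  # three of them are found, one is missed
print(recall(y_true, y_pred))  # → 0.75
```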

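The F1 score combines precision and recall into a single number and is often used as one metric to evaluate a classification model. It is the harmonic mean of the two, F1 = 2 × (precision × recall) / (precision + recall), and ranges from 0 to 1, with higher scores indicating better performance. Because the harmonic mean is pulled toward the smaller value, a model only achieves a high F1 when both precision and recall are reasonably high.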
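A minimal sketch of computing precision, recall, and F1 from binary labels is shown here; the helper name `precision_recall_f1` and the toy data are hypothetical. The data is chosen so that precision and recall differ sharply, which shows the harmonic mean staying close to the smaller value (an arithmetic mean of the same two numbers would be 0.625).

```python
def precision_recall_f1(y_true: list[int], y_pred: list[int]) -> tuple[float, float, float]:
    """Return (precision, recall, F1) for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical example: a very cautious model that flags only one of four positives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0]
print(precision_recall_f1(y_true, y_pred))  # → (1.0, 0.25, 0.4)
```

Perfect precision does not rescue the score here: the low recall drags F1 down to 0.4.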

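It is important to consider both precision and recall when evaluating a classification model, because the two usually trade off against each other. For example, raising a classifier's decision threshold typically increases precision but lowers recall, and lowering the threshold does the reverse, so a model with high precision may have low recall, and vice versa.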
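The trade-off can be made concrete with a small threshold sweep. This is a sketch under assumed inputs: the `labels` and `scores` lists are invented for illustration, and a prediction counts as positive when its score is at least the threshold.

```python
def precision_recall_at(labels: list[int], scores: list[float], threshold: float) -> tuple[float, float]:
    """Predict positive when score >= threshold, then compute precision and recall."""
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical classifier scores with their true labels (1 = positive).
labels = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
scores = [0.95, 0.9, 0.8, 0.75, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1]

for t in (0.85, 0.55, 0.25):
    p, r = precision_recall_at(labels, scores, t)
    print(f"threshold={t:.2f}  precision={p:.3f}  recall={r:.3f}")
```

With these toy values, lowering the threshold from 0.85 to 0.25 moves precision from 1.000 down to 0.625 while recall rises from 0.400 to 1.000.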

The appropriate balance between precision and recall will depend on the specific task and the desired trade-off.

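In some applications, high precision matters more, even at the expense of lower recall; a spam filter, for instance, should rarely send legitimate mail to the spam folder. In others, high recall matters more, even at the expense of lower precision; a screening test for a serious disease should miss as few true cases as possible.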

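The F1 score can also be used to choose an operating point, for example by selecting the decision threshold that maximizes F1 on validation data. It is particularly useful when the positive class is rare, because accuracy can then look high even for a model that misses most positives. Note, however, that F1 weights precision and recall equally; when false positives and false negatives have unequal costs, the more general F-beta score, which attaches β times as much importance to recall as to precision, is usually a better fit.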
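One common way to use F1 to pick an operating point is to sweep the decision threshold and keep the cutoff with the highest score. The sketch below does this on hypothetical scores and labels; the helper names `f_beta` and `best_threshold` are illustrative, not a library API. It uses the standard F-beta formula written in terms of confusion counts, which reduces to F1 = 2TP / (2TP + FP + FN) when `beta=1` and weights recall more heavily when `beta > 1`.

```python
def f_beta(tp: int, fp: int, fn: int, beta: float = 1.0) -> float:
    """F-beta from confusion counts; beta=1 gives F1 = 2TP / (2TP + FP + FN)."""
    b2 = beta * beta
    denom = (1 + b2) * tp + b2 * fn + fp
    return (1 + b2) * tp / denom if denom else 0.0


def best_threshold(labels: list[int], scores: list[float], beta: float = 1.0) -> tuple[float, float]:
    """Try each distinct score as a cutoff (predict positive if score >= cutoff)
    and return the (cutoff, F-beta) pair with the highest F-beta."""
    best = (float("nan"), -1.0)
    for cutoff in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= cutoff and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= cutoff and y == 0)
        fn = sum(1 for y, s in zip(labels, scores) if s < cutoff and y == 1)
        f = f_beta(tp, fp, fn, beta)
        if f > best[1]:  # on ties, the higher cutoff seen first is kept
            best = (cutoff, f)
    return best


# Hypothetical classifier scores with their true labels (1 = positive).
labels = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
scores = [0.95, 0.9, 0.8, 0.75, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1]

print(best_threshold(labels, scores))          # → (0.6, 0.8)
print(best_threshold(labels, scores, beta=2))  # cutoff 0.3, F2 = 25/28 ≈ 0.89
```

With these toy values, F1 selects a cutoff of 0.6, while F2 (`beta=2`) prefers the lower cutoff 0.3, which recovers every positive at the cost of three false positives. In practice the threshold would usually be chosen on held-out validation data rather than on the data used for the final evaluation.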
