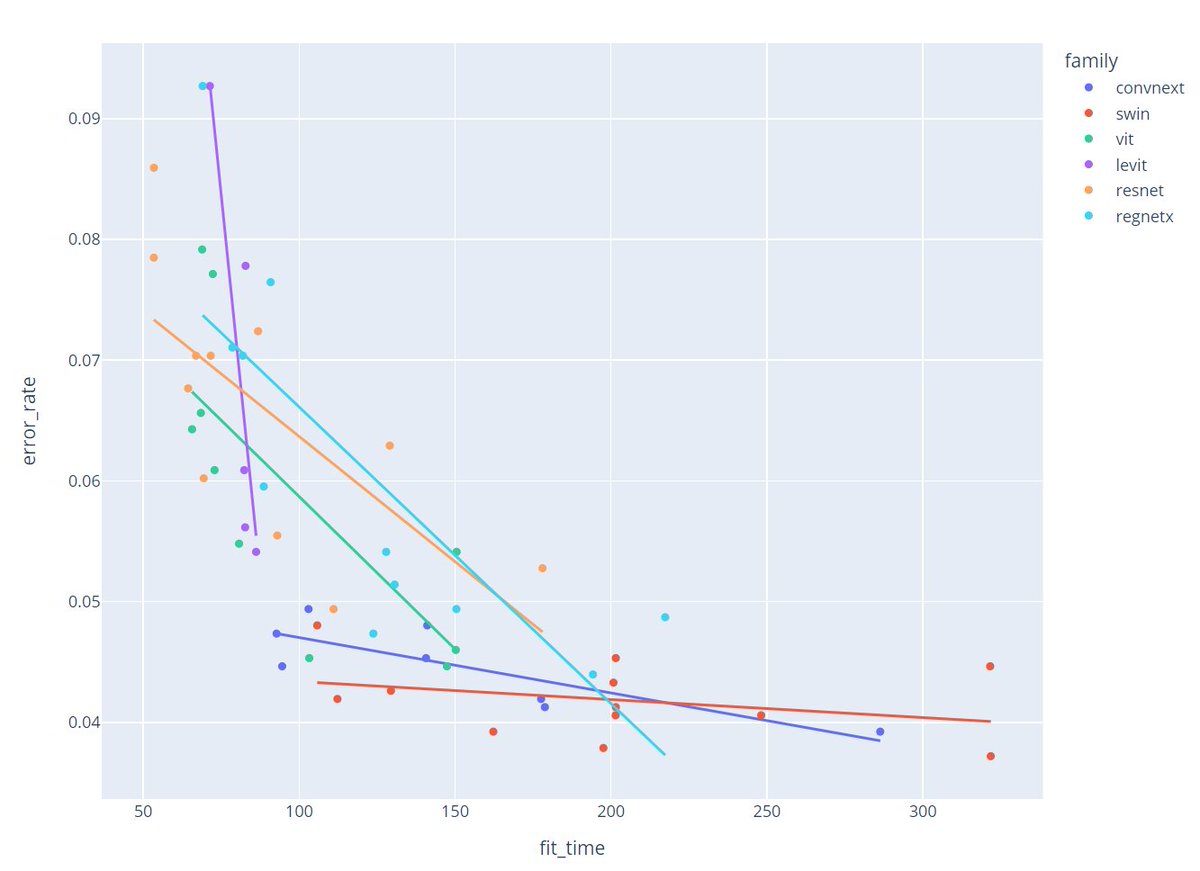

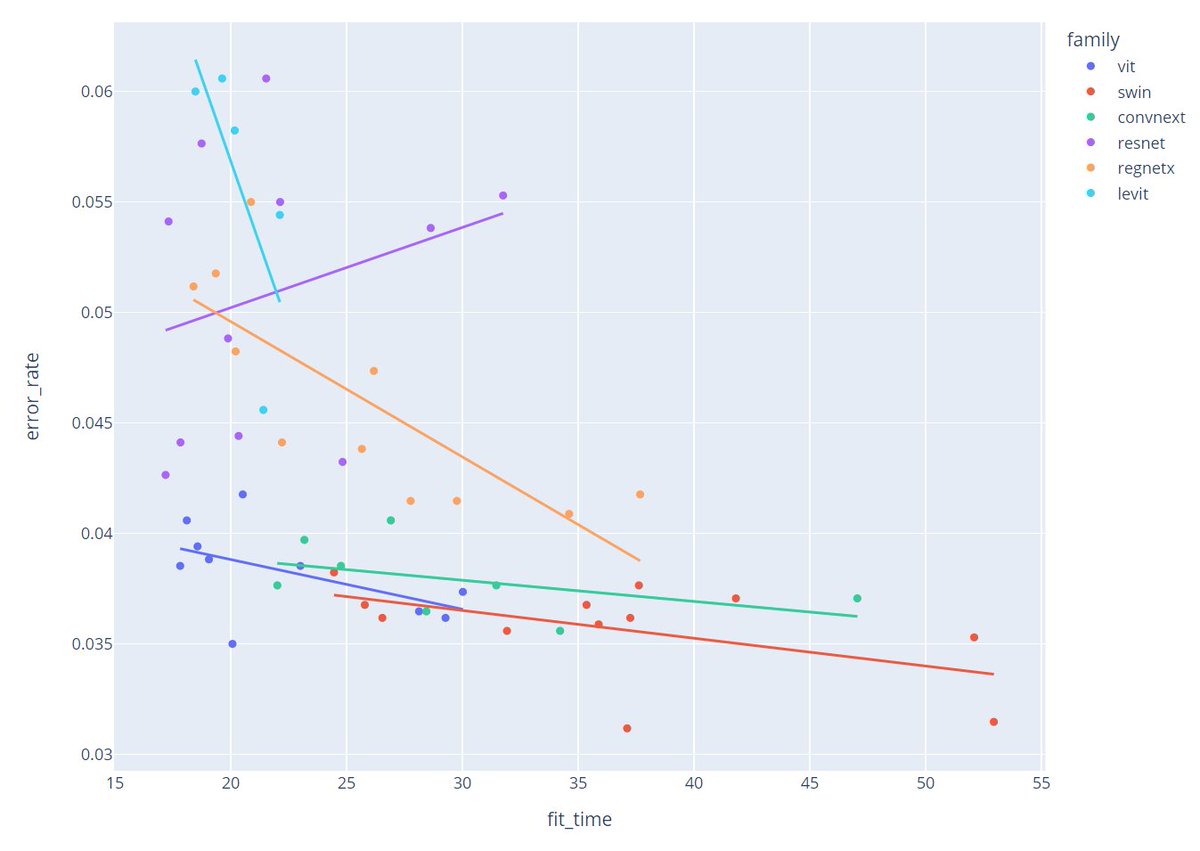

We now finally have the thing I've always wanted: an analysis of the best vision models for finetuning.

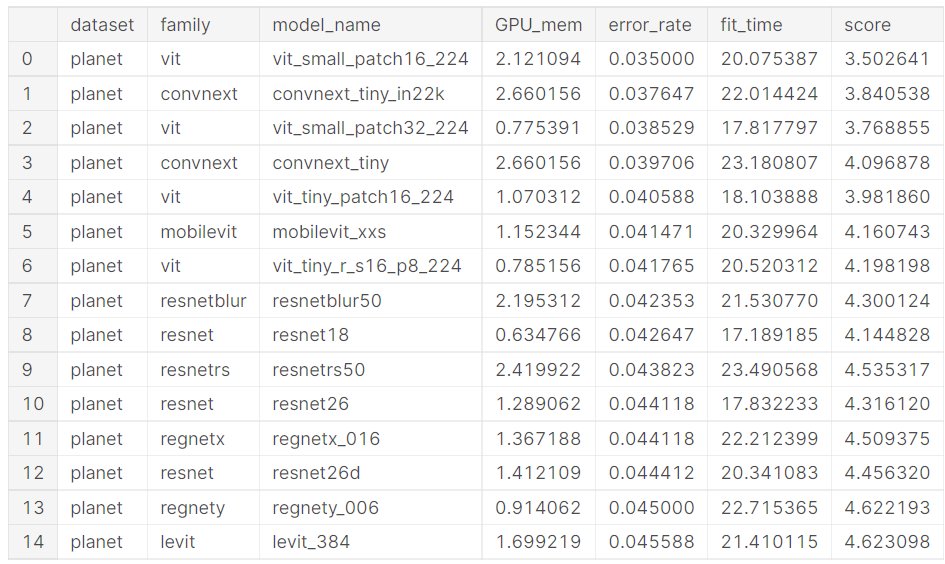

In joint work with @capetorch we tried nearly 100 models on two very different datasets across various hyperparams.

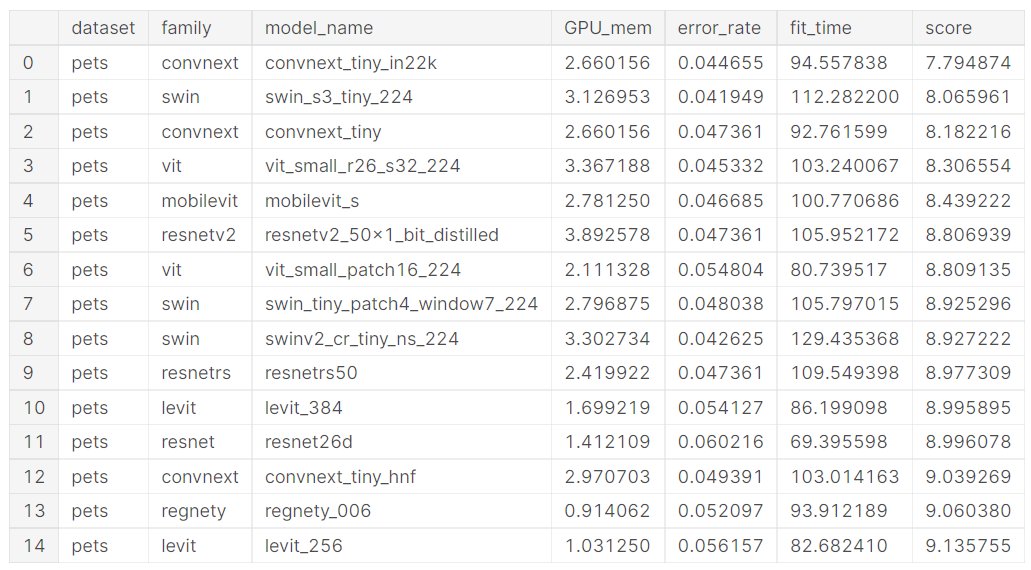

Here are the top 15 on the Oxford-IIIT Pet dataset: 1/🧵

I've put together a writeup of the whole approach, and we've made the results and code publicly available to anyone who wants to dig in themselves:

kaggle.com